Batch Normalization

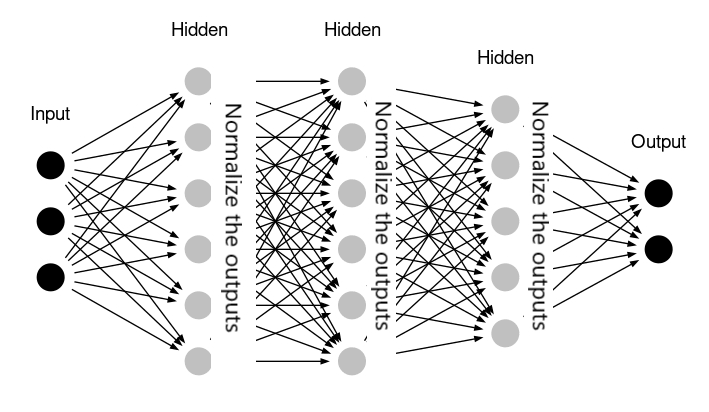

TL;DR: Batch Normalization is a technique for improving the training of deep neural networks by addressing issues such as internal covariate shift. Its benefits include improved optimization, a regularizing effect, reduced sensitivity to weight initialization, and the ability to train deeper networks.
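For readers who want the mechanics up front, here is a minimal sketch of the training-time computation in plain Python. It assumes a mini-batch is given as a list of feature vectors. The function name `batch_norm_forward` and the parameters `gamma`, `beta` and `eps` are illustrative choices, not any particular library's API. Each feature is normalized with the mini-batch mean and (biased) variance, then scaled by `gamma` and shifted by `beta`, which would be learnable in a real network.

```python
import math
from typing import List, Sequence


def batch_norm_forward(
    batch: Sequence[Sequence[float]],
    gamma: Sequence[float],
    beta: Sequence[float],
    eps: float = 1e-5,
) -> List[List[float]]:
    """Training-mode batch normalization over one mini-batch (illustrative sketch).

    batch: N examples, each a vector of D features.
    gamma, beta: per-feature scale and shift (hypothetical parameter names).
    eps: small constant that keeps the division numerically safe.
    """
    n = len(batch)
    d = len(batch[0])
    # Per-feature mean, computed across the batch dimension.
    mean = [sum(row[j] for row in batch) / n for j in range(d)]
    # Per-feature biased variance (divide by n, not n - 1).
    var = [sum((row[j] - mean[j]) ** 2 for row in batch) / n for j in range(d)]
    out = []
    for row in batch:
        # Normalize to roughly zero mean and unit variance, then scale and shift.
        out.append([
            gamma[j] * (row[j] - mean[j]) / math.sqrt(var[j] + eps) + beta[j]
            for j in range(d)
        ])
    return out


if __name__ == "__main__":
    x = [[1.0, 2.0], [3.0, 6.0], [5.0, 10.0]]
    y = batch_norm_forward(x, gamma=[1.0, 1.0], beta=[0.0, 0.0])
    for row in y:
        print([round(v, 4) for v in row])
```

This sketch covers only the forward pass during training. A full layer would also keep running estimates of the mean and variance for use at inference time, and it would need a backward pass for `gamma` and `beta` to be learned.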


Table of contents

- What is Batch Normalization?
- Understanding Internal Covariate Shift
- How Batch Normalization Works
- Benefits of Batch Normalization
- Batch Normalization During Inference
- Challenges and Considerations
- Conclusion
- References