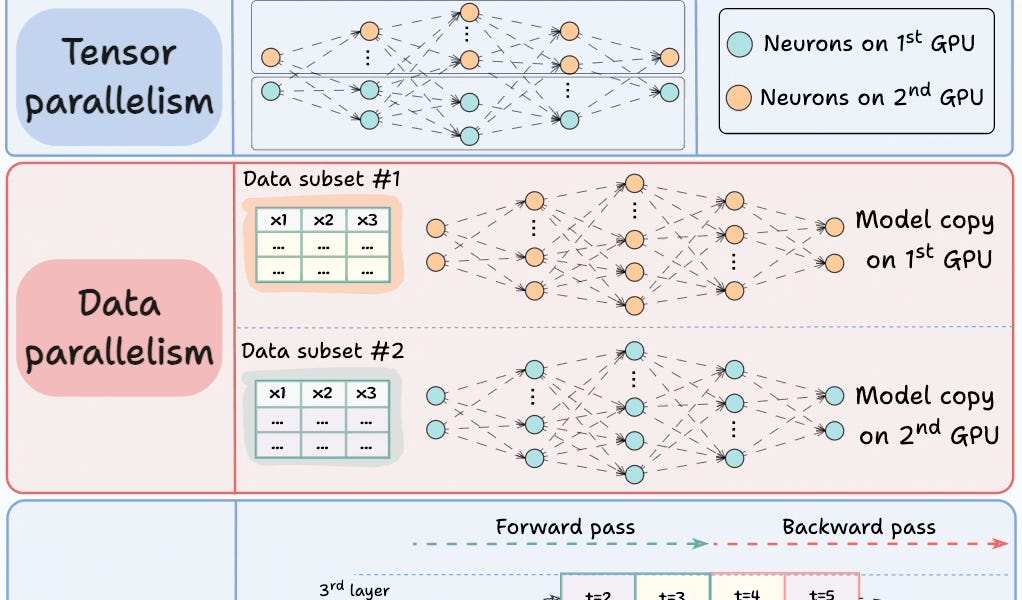

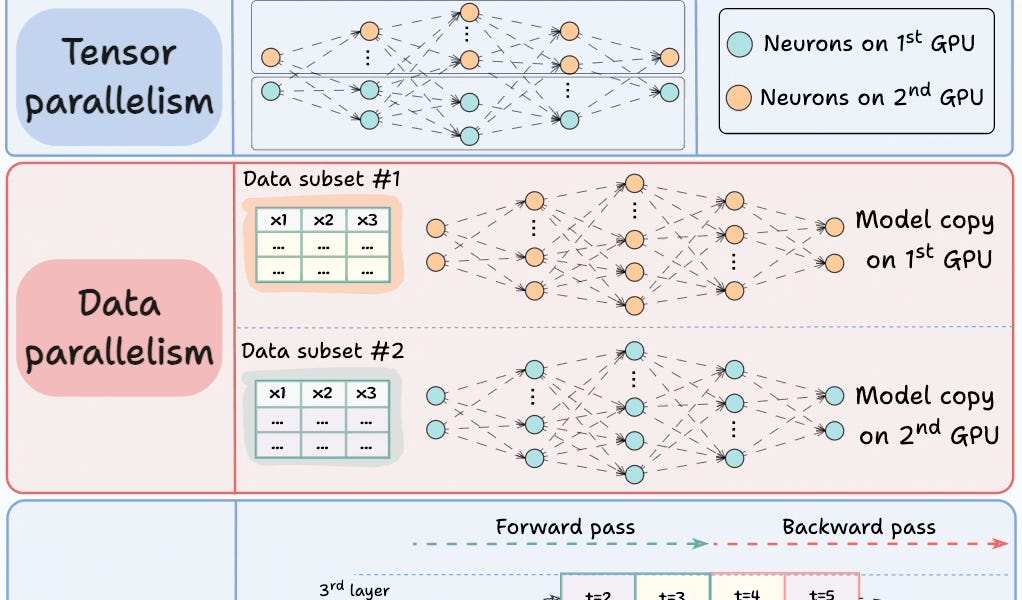

4 Strategies for Multi-GPU Training

TLDR: This post discusses four strategies for multi-GPU training: model parallelism, tensor parallelism, data parallelism, and pipeline parallelism.
